Credible Science, Incredible Egg

The latest egg nutrition, research and information.

Take a deep dive into The Facts Behind the Incredible Egg

We’re the science and nutrition education division of the American Egg Board.

Most Popular Articles

Featured Categories

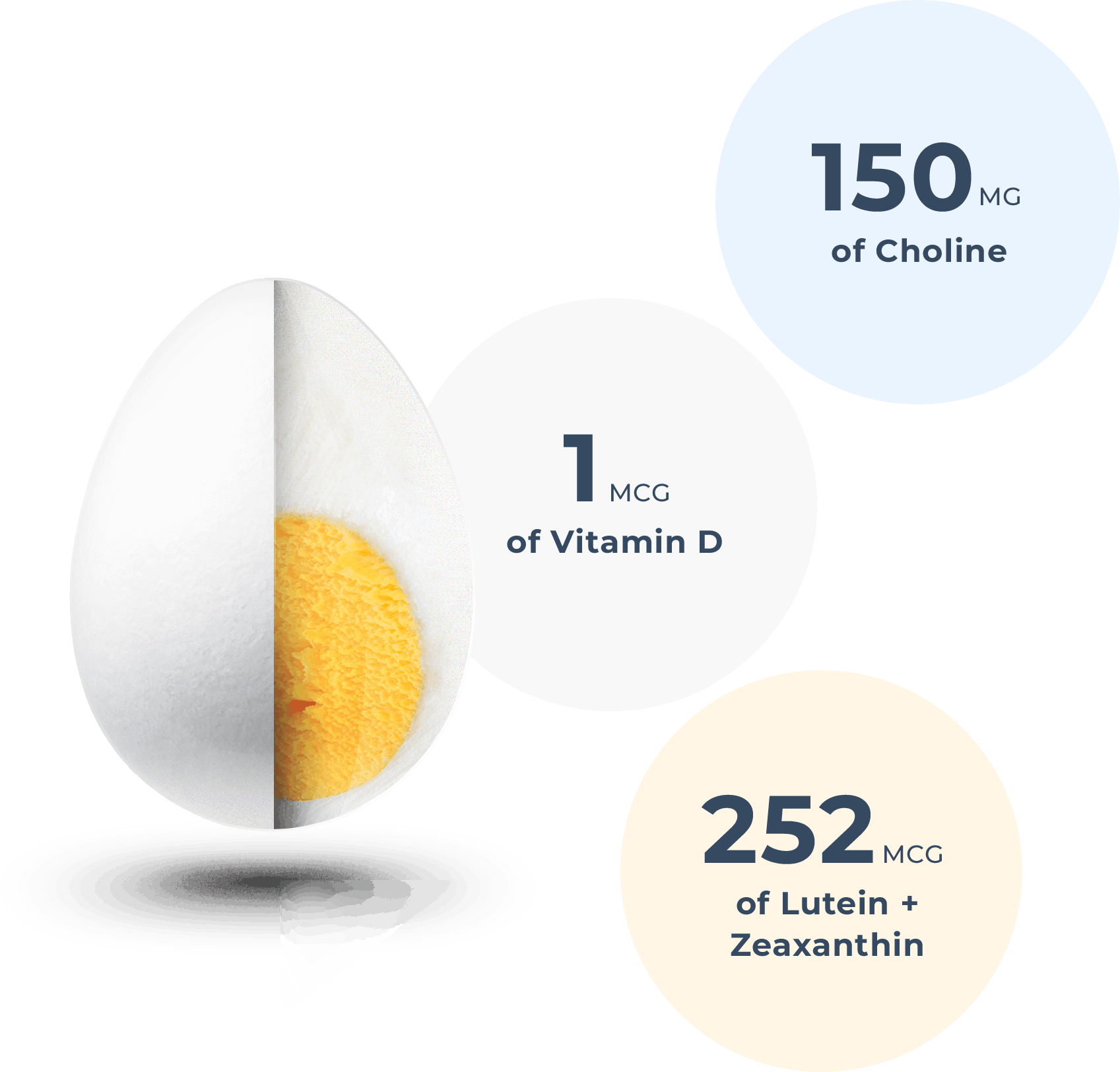

Eggs by the Numbers

Eggs are a nutrient-rich food that provides a good or excellent source of eight essential nutrients. See what else you’ll find in Eggs by the Numbers.

Egg Enthusiast Community


Veggie, Black Bean, & Egg Quesadilla

Egg quesadillas are one of my fave dishes to make for so many reasons: they’re nutritious, delicious, and affordable! And by adding veggies, we’re getting a great balance of fat, fiber, and protein!


Mini Mediterranean Frittatas

Get a taste of the Mediterranean at breakfast with these mini frittatas! Make a batch over the weekend and reheat each morning for a quick and filling breakfast on the go.


The Incredible Egg: Cognition, Nutrition & Culinary Hero

Did you know that eggs contain important nutrients for cognition and brain health, including choline and lutein? One large egg contains about 150 mg of choline and 252 mcg of lutein and zeaxanthin. Egg Nutrition Center’s Mickey Rubin, PhD, sits down with Melissa Joy Dobbins to chat nutrition, cognition, and all things eggs. Tune in to Sound Bites Podcast […]


Avocado Toast Topped With Sunny-Side Egg

Delish #avocadotoast topped with a sunny-side egg, hemp seeds, red pepper flakes, lemon olive oil & micro greens
